composability

Last tended to on October 13, 2024

You have a set of reusable pieces that can be combined to form different, interesting things.

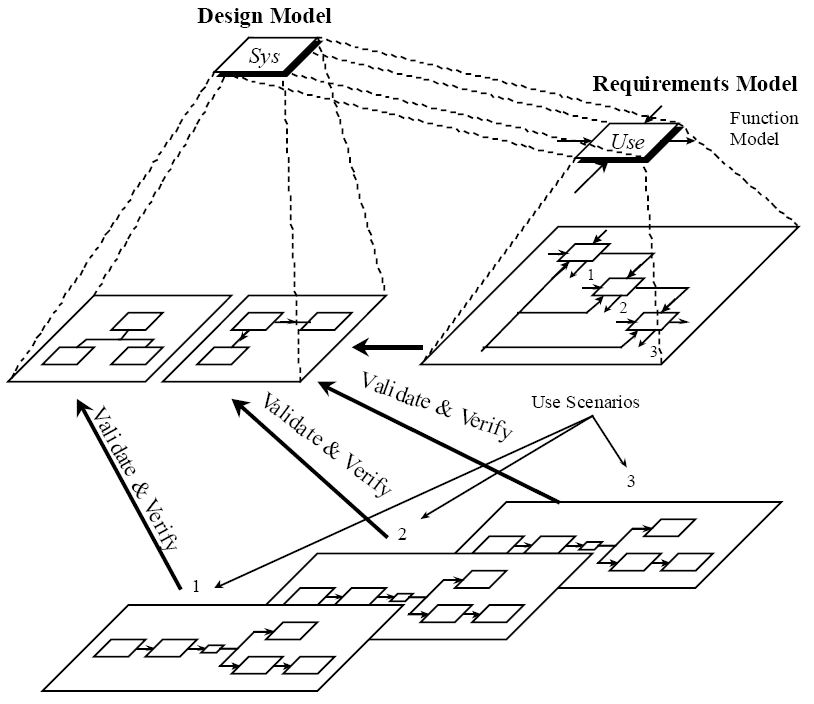

Decomposition of programs into modular, reusable units is one example of this.

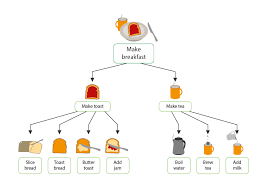
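Here's a minimal Python sketch of that idea. The `compose` helper and the tiny text functions are my own illustrative choices, not from any particular library. A few small pieces get reused across two different "programs":

```python
from functools import reduce
from typing import Callable


def compose(*fns: Callable) -> Callable:
    """Right-to-left composition: compose(f, g, h)(x) == f(g(h(x)))."""
    return reduce(lambda f, g: lambda x: f(g(x)), fns)


# A small, reusable piece...
normalize = compose(str.lower, str.strip)

# ...combined with other pieces into two different programs.
word_count = compose(len, str.split, normalize)
slugify = compose("-".join, str.split, normalize)

print(word_count("  Hello Composable World "))  # 3
print(slugify("  Hello Composable World "))     # hello-composable-world
```

Neither `word_count` nor `slugify` needs to know how `normalize` works; each just plugs it in.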

Some methodical activities, such as making breakfast, also decompose well into modular steps:

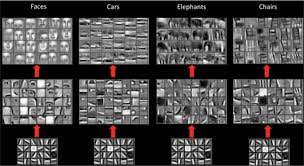

A machine learning example of compositionality: a computer vision model’s early layers tend to implement edge detectors, curve detectors, etc., which are modular pieces that higher-level layers then combine in various ways to form dog detectors, car detectors, etc.
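A toy sketch of that layering, heavily simplified: real CNNs learn their filters from data, whereas here the 1D "edge detectors" are hand-written and the `bar_detector` name is hypothetical. The point is only that the higher-level detector is built by reusing the lower-level ones.

```python
def conv1d(signal: list[int], kernel: list[int]) -> list[int]:
    """'Valid' 1D cross-correlation, which is what deep learning libraries call convolution."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def relu(xs: list[int]) -> list[int]:
    return [max(0, x) for x in xs]


# Low-level, reusable pieces: hand-written edge detectors.
RISING_EDGE = [-1, 1]
FALLING_EDGE = [1, -1]


def bar_detector(signal: list[int], width: int) -> list[int]:
    """Higher-level detector built from the edge detectors: fires at position i
    when a rising edge at i is followed by a falling edge at i + width."""
    rising = relu(conv1d(signal, RISING_EDGE))
    falling = relu(conv1d(signal, FALLING_EDGE))
    return [min(rising[i], falling[i + width]) for i in range(len(rising) - width)]


print(bar_detector([0, 0, 1, 1, 1, 0, 0], width=3))  # [0, 1, 0]
print(bar_detector([0, 0, 1, 1, 1, 0, 0], width=2))  # [0, 0, 0, 0]
```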

But ultimately, the ways in which current machine learning models can “intelligently” combine different pieces and parts are limited. See the tweet about DALL-E trying to stack blocks, bless its heart:

The ways in which #dalle is so incredible (and it is) really put a fine point on the ways in which compositionality is so hard pic.twitter.com/I6DC4g53MK

— David Madras (@david_madras) April 8, 2022

One step towards compositionality is the idea of neuro-symbolic models, which combine logic-based systems with connectionist methods.

compositionality in the human mind

How do our brains combine simple concepts into more complex ones? Even though the input we receive is noisy and finite, we can (in theory) produce an unbounded number of sentences. r/BrandNewSentence is an entertaining example of this.
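A tiny illustration of finite pieces yielding unbounded output, using a made-up toy grammar (not a model of how the brain actually does it): two nouns, two verbs, and one recursive "that" rule already give a number of phrases that keeps growing with the allowed nesting depth.

```python
# A made-up toy grammar: a finite vocabulary plus one recursive rule.
NOUNS = ["the cat", "the dog"]
VERBS = ["saw", "chased"]


def noun_phrases(depth: int) -> list[str]:
    """All noun phrases with at most `depth` levels of 'that ...' embedding."""
    if depth == 0:
        return list(NOUNS)
    smaller = noun_phrases(depth - 1)
    embedded = [f"{n} that {v} {m}" for n in NOUNS for v in VERBS for m in smaller]
    return list(NOUNS) + embedded


for d in range(4):
    print(d, len(noun_phrases(d)))  # counts: 2, 10, 42, 170
```

Raise the depth and the count keeps climbing, e.g. "the cat that saw the dog that chased the cat" appears at depth 2.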

Related: [1]

Bibliography

Links to “composability”

Counterfactual Generative Networks

Neural networks like to “cheat” by using simple correlations that fail to generalize. E.g., image classifiers can learn spurious correlations with texture in the background, rather than the actual object’s shape; a classifier might learn that “green grass background” => “cow classification.”

This work decomposes the image generation process into three independent causal mechanisms – shape, texture, and background. Thus, one can generate “counterfactual images” to improve out-of-distribution (OOD) robustness, e.g. by placing a cow on a swimming pool background. Related: generative models counterfactuals
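A sketch of just the final composition step, assuming the three mechanisms are combined by alpha compositing (mask × texture + (1 − mask) × background). In the actual work each mechanism is a learned generator; here the images are hypothetical 2×2 grayscale grids so the counterfactual swap is easy to see.

```python
# A grayscale "image" as rows of pixel intensities in [0, 1].
Image = list[list[float]]


def composite(mask: Image, texture: Image, background: Image) -> Image:
    """Alpha-composite pixel by pixel: mask * texture + (1 - mask) * background."""
    return [
        [m * t + (1 - m) * b for m, t, b in zip(m_row, t_row, b_row)]
        for m_row, t_row, b_row in zip(mask, texture, background)
    ]


cow_shape = [[0.0, 1.0], [0.0, 1.0]]  # hypothetical mask: the "cow" is the right column
cow_texture = [[0.8, 0.8], [0.8, 0.8]]
grass = [[0.3, 0.3], [0.3, 0.3]]
pool = [[0.9, 0.9], [0.9, 0.9]]

print(composite(cow_shape, cow_texture, grass))  # [[0.3, 0.8], [0.3, 0.8]]
print(composite(cow_shape, cow_texture, pool))   # counterfactual: [[0.9, 0.8], [0.9, 0.8]]
```

Because shape, texture, and background are separate inputs, swapping one while holding the others fixed is trivial, which is exactly what makes the counterfactual "cow on a pool" cheap to produce.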

modularity

I think it can be really good. It’s closely related to compositionality; I still have to hash out what I think the difference is.

♏️ emacs gets a lot of its power from its modularity. Packages are just little pieces of code: you can add them to or remove them from your ecosystem as you please. Contrast that with proprietary software, where you may be much more limited in the customization you can do… a piece of functionality in Evernote might not carry over to VSCode, which might not carry over to your web browser, etc.

^it’s beautiful chaos sometimes, though! Modules designed by tens of thousands of independent humans are bound to be overlapping, redundant, and sometimes confusing to “glue together”…

But luckily emacs has a great community that has helped smooth out the rough edges between packages, and even make them work together to make emacs a better place.

But… is the brain modular? I think it might not quite work that way.

notes I’m actively working on

Mentally bookmarking these pages – I think there is some interesting “sauce” to be gotten from each of these.

notes I’m actively working on (hah, recursive)

code comments

📚 book notes

🔲 Conway’s Game of Life

🧐 philosophy

rules of thumb

decomposition

Don’t Repeat Yourself (DRY) works well for code, not people.

blog posts

🟪🟦🟩 Life is a Picture, But You Live in a Pixel — Wait But Why

Towards Causal Representation Learning

Yoshua Bengio talk. Also, the associated paper.

causal representation learning: the discovery of high-level causal variables from low-level observations.

In practice, i.i.d. is a bad assumption: test data doesn’t stay in the same distribution as the training data, and current DL systems are brittle when it shifts.
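A toy illustration of why a broken i.i.d. assumption hurts, using made-up data in the spirit of the cow/grass example: a deliberately naive "learner" that keeps whichever single feature best predicts the training labels latches onto the spurious background feature, then fails completely once the test distribution shifts.

```python
# Made-up toy data: each example is (looks_like_cow, green_background, is_cow).
# In training, the background is a perfect but spurious predictor; shape is slightly noisy.
train = [(1, 1, 1)] * 9 + [(0, 1, 1)] + [(0, 0, 0)] * 9 + [(1, 0, 0)]
# After a distribution shift: cows on swimming pools, grass with no cows.
test = [(1, 0, 1)] * 10 + [(0, 1, 0)] * 10

FEATURES = {"shape": 0, "background": 1}


def accuracy(data, feature: str) -> float:
    """Accuracy of the rule 'predict is_cow = this feature'."""
    i = FEATURES[feature]
    return sum(x[i] == x[2] for x in data) / len(data)


def fit(data) -> str:
    """Naive learner: keep whichever single feature scores best on the given data."""
    return max(FEATURES, key=lambda f: accuracy(data, f))


chosen = fit(train)
print(chosen)                                           # background
print(accuracy(train, chosen), accuracy(test, chosen))  # 1.0 0.0
print(accuracy(test, "shape"))                          # 1.0
```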

But…what assumption can we replace it with, then?

how does the brain break knowledge apart into “pieces” that can be reused? => compositionality (think decomposition into helper functions in programming). Examples of compositionality include

is it always optimal to compartmentalize / solve one problem at a time? Are there times when we might want to solve multiple problems simultaneously?

thinking about this in relation to dynamic programming: I think it serves as a good metaphor for why it can be useful to think about the structure of the problems you’re solving, and whether there’s any overlap / reuse that can occur… this also seems related to decomposition / modularity.
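The classic small example of that overlap is Fibonacci: the naive recursion recomputes the same subproblems over and over, while memoizing them (here with Python's `functools.lru_cache`) lets each one be solved once and reused. The `calls` counters are just for illustration.

```python
from functools import lru_cache

calls = {"naive": 0, "memo": 0}


def fib_naive(n: int) -> int:
    calls["naive"] += 1
    return n if n < 2 else fib_naive(n - 1) + fib_naive(n - 2)


@lru_cache(maxsize=None)
def fib_memo(n: int) -> int:
    # The body only runs on a cache miss, so this counts distinct subproblems solved.
    calls["memo"] += 1
    return n if n < 2 else fib_memo(n - 1) + fib_memo(n - 2)


print(fib_naive(20), fib_memo(20))  # 6765 6765
print(calls)                        # {'naive': 21891, 'memo': 21}
```

Same answer, but noticing the shared structure cuts the work from 21,891 calls to 21, one per distinct subproblem.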

emacs as a playground (emacs provides a powerful set of primitives for editing text)

so, one reason i like emacs is that it provides an incredibly powerful set of primitives for editing text. these primitives are not just confined to the ‘writing app’ or the ‘coding app’ (though there is not really such a thing as an ‘app’ in emacs), but rather they are present throughout the whole system. kinda like how cmd-C and cmd-V work in every app, but generalized.

examples:

you have primitives for things like deleting, uppercasing, highlighting, jumping up and down, creating a new line, recording a sequence of actions and replaying those back later… all the things that would be useful when editing text. and each of these primitives can be applied dynamically to a range of characters.
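a much-simplified Python model of that idea, purely hypothetical: these are not emacs’s actual functions, and real emacs keyboard macros record keystrokes rather than function calls. primitives are functions over a region of text, and a recorder replays a sequence of them on new text.

```python
from typing import Callable

# Hypothetical primitive: takes buffer text and a region [start, end), returns new text.
Primitive = Callable[[str, int, int], str]


def upcase_region(text: str, start: int, end: int) -> str:
    return text[:start] + text[start:end].upper() + text[end:]


def delete_region(text: str, start: int, end: int) -> str:
    return text[:start] + text[end:]


class MacroRecorder:
    """Records (primitive, start, end) actions so the same edit can be replayed later."""

    def __init__(self) -> None:
        self.actions: list[tuple[Primitive, int, int]] = []

    def record(self, primitive: Primitive, start: int, end: int) -> None:
        self.actions.append((primitive, start, end))

    def replay(self, text: str) -> str:
        for primitive, start, end in self.actions:
            text = primitive(text, start, end)
        return text


macro = MacroRecorder()
macro.record(upcase_region, 0, 5)   # uppercase the first five characters
macro.record(delete_region, 5, 6)   # then delete the character right after them
print(macro.replay("hello world"))  # HELLOworld
print(macro.replay("howdy there"))  # HOWDYthere
```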

but then, perhaps even cooler: these primitives are like LEGO blocks, or maybe more aptly, like Play-Doh. they can be molded, composed, replaced, or extended into anything the end-user wants.

- don’t like the default keybindings? (like vim’s better, as i do?) you can change them.

- you can set up a primitive that calls any external API, too. one example i like is github copilot, the AI code completion engine that i use. since everything in emacs is text, and copilot is an engine for text completion, by simply setting up copilot, we automagically get copilot in our code files, in our note files, in our terminal shell, and anywhere else we want it.
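a sketch of both points under obvious simplifying assumptions: the key names, commands, and the `fake_complete` stand-in are all hypothetical, and no real copilot API is involved. a keymap is just a table from keys to commands, user overrides layer on top of the defaults, and because every buffer is text, one completion command works everywhere.

```python
from typing import Callable

Command = Callable[[str], str]  # hypothetical: a command maps buffer text to new text


def upcase_buffer(text: str) -> str:
    return text.upper()


def delete_last_char(text: str) -> str:
    return text[:-1]


def fake_complete(text: str) -> str:
    """Stand-in for an external completion engine; no real API is called."""
    return text + " world" if text.endswith("hello") else text


DEFAULT_KEYMAP: dict[str, Command] = {"C-c u": upcase_buffer, "DEL": delete_last_char}


def make_keymap(overrides: dict[str, Command]) -> dict[str, Command]:
    """Layer user bindings over the defaults; on a clash, the user's binding wins."""
    return {**DEFAULT_KEYMAP, **overrides}


def press(keymap: dict[str, Command], key: str, text: str) -> str:
    return keymap[key](text)


# A vim-ish rebinding, plus one completion command bound once.
keymap = make_keymap({"x": delete_last_char, "TAB": fake_complete})

# Because every buffer is just text, the same binding works in all of them.
buffers = {"code": "# say hello", "notes": "todo: hello", "shell": "echo hello"}
for name, text in buffers.items():
    print(name, "->", press(keymap, "TAB", text))
```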