🗺 representations

Last tended to on May 27, 2022

Links to “🗺 representations”

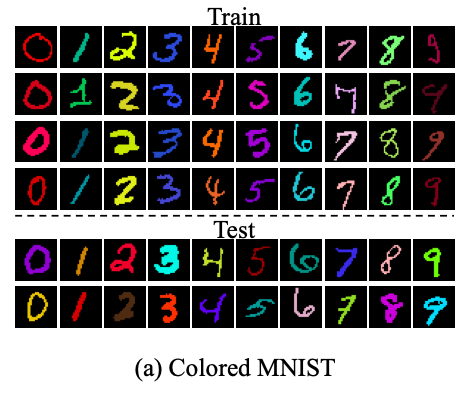

Counterfactual Generative Networks (Problem setting)

We have images

We might want to disentangle digit shape from color; however, in the train fold, all examples of the same digit are also the same color. (In the test fold, the colors are randomized.) Given this dataset, a “dumb” neural net might learn to do the simplest thing, which is to count how many pixels are a certain color; if a digit is red, it assumes it must be a 0, if it’s green, a 1, and so on, completely ignoring the digit’s shape.
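To make the failure mode concrete, here is a minimal sketch of the color shortcut. It is a toy stand-in, not the actual dataset or any method from the paper: the three-color palette, `make_example`, and `shortcut_classifier` are all hypothetical names made up for illustration, and the "shape" is just a string placeholder for the digit's pixels.

```python
import random

# Hypothetical palette: in the train fold, digit d is always drawn in COLORS[d].
COLORS = ["red", "green", "blue"]

def make_example(digit, randomize_color, rng):
    """Return (color, shape, label); `shape` stands in for the digit's pixels."""
    color = rng.choice(COLORS) if randomize_color else COLORS[digit]
    return color, f"shape-{digit}", digit

def shortcut_classifier(color, shape):
    """Predict the digit from color alone, ignoring shape entirely."""
    return COLORS.index(color)

def accuracy(examples):
    correct = sum(shortcut_classifier(c, s) == y for c, s, y in examples)
    return correct / len(examples)

rng = random.Random(0)
# Train fold: color is perfectly tied to the digit.
train = [make_example(rng.randrange(3), False, rng) for _ in range(3000)]
# Test fold: colors are randomized, so color carries no label information.
test = [make_example(rng.randrange(3), True, rng) for _ in range(3000)]

train_acc = accuracy(train)
test_acc = accuracy(test)
print(f"train accuracy: {train_acc:.2f}")
print(f"test accuracy:  {test_acc:.2f}")
```

The shortcut is perfect on the train fold and drops to roughly chance (about one in three here) once the colors are randomized, which is exactly why shape and color need to be disentangled.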

🧐 philosophy

The aim of philosophy, abstractly formulated, is to understand how things in the broadest possible sense of the term hang together in the broadest possible sense of the term.

– Wilfrid Sellars, “Philosophy and the Scientific Image of Man”

Some things we can think about through a philosophical lens…

Do we have free will / agency? Are we just subservient to a deterministic universe? Or both??

Image: Gosper glider gun in Conway’s Game of Life :-)

- How does the mind work? What do our mental representations of the outside world look like?

- mind-body

- science

- life philosophy

Why Graphs? (Introduction > Why Graphs?)

Graphs are a general language for describing and analyzing entities with relationships/interactions. Many domains have a natural relational structure that lends itself to a graph representation:

- Physical roads, bridges, tunnels connecting places. 🚗

- Particles, based on their proximities. ⚛️

- Animals in a food ecosystem. 🕸

- Computer networks. 💻

- Knowledge graphs, scene graphs, code graphs…

Graph Representations (Graph Representations)

A few different traditional graph representations we can use.
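The excerpt doesn't say which representations it means, so as an assumption, here is a sketch of three common ones (edge list, adjacency matrix, adjacency list) built for the same small graph. The 4-node undirected graph is a made-up example.

```python
# Hypothetical 4-node undirected graph, given as an edge list:
# one (u, v) pair per edge.
edge_list = [(0, 1), (0, 2), (1, 2), (2, 3)]
num_nodes = 4

# Adjacency matrix: entry [u][v] is 1 if u and v share an edge.
# Symmetric because the graph is undirected.
adj_matrix = [[0] * num_nodes for _ in range(num_nodes)]
for u, v in edge_list:
    adj_matrix[u][v] = 1
    adj_matrix[v][u] = 1

# Adjacency list: each node maps to the list of its neighbors.
adj_list = {node: [] for node in range(num_nodes)}
for u, v in edge_list:
    adj_list[u].append(v)
    adj_list[v].append(u)

# The same quantity (node degree) read off two representations.
degrees_from_matrix = [sum(row) for row in adj_matrix]
degrees_from_list = [len(adj_list[node]) for node in range(num_nodes)]

print(adj_matrix)
print(adj_list)
print(degrees_from_matrix, degrees_from_list)
```

Roughly, the matrix costs memory quadratic in the number of nodes but gives constant-time edge lookups, while the edge list and adjacency list scale with the number of edges, which tends to matter for large sparse graphs.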

What causes changes in distribution? (What causes changes in distribution?)

Hypothesis to replace the i.i.d. assumption: changes in distribution are the consequence of an intervention on one or a few causes/mechanisms. So the data are not identically distributed, but they are still pretty similar if you’re in the right high-level representation space. (E.g. if you put shaded glasses on, all the pixels change in basic RGB space – but in some high-level semantic space, only one bit changed!)
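The shaded-glasses example can be sketched as a toy. Everything here is an illustrative assumption: the `render` function, the `scene_seed` and `glasses_on` fields, and the "halve every pixel" model of shaded glasses are all made up to show the counting argument, not taken from the source.

```python
def render(latent, width=8):
    """Toy renderer: map a high-level scene description to pixel intensities."""
    # Arbitrary deterministic "scene" with intensities in 50..249.
    pixels = [(latent["scene_seed"] * 37 + i * 11) % 200 + 50 for i in range(width)]
    if latent["glasses_on"]:
        # Assumed model of shaded glasses: every pixel gets darker.
        pixels = [p // 2 for p in pixels]
    return pixels

def count_latent_changes(before, after):
    """Number of high-level variables that differ."""
    return sum(before[key] != after[key] for key in before)

def count_pixel_changes(before, after):
    """Number of pixel positions that differ."""
    return sum(a != b for a, b in zip(before, after))

latent_before = {"scene_seed": 3, "glasses_on": False}
latent_after = {"scene_seed": 3, "glasses_on": True}  # intervention on one cause

pixels_before = render(latent_before)
pixels_after = render(latent_after)

latent_changes = count_latent_changes(latent_before, latent_after)
pixel_changes = count_pixel_changes(pixels_before, pixels_after)
print(f"high-level variables changed: {latent_changes}")
print(f"pixels changed: {pixel_changes} of {len(pixels_before)}")
```

One intervention flips a single high-level variable, yet every pixel moves: the shift looks large in raw pixel space and tiny in the semantic space.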

Nancy Kanwisher (A roadmap for research > What’s represented and computed in each region?)

Astonishing success of convnets…analogies between them and perception in the brain. A model of how vision “might” work in the brain. (Whoa, that’s fantastic to hear a NEUROSCIENTIST say that. We can consider them a model of the brain!)

Ratan Murty – CNN-based models of FFA, PPA, EBA? Concretely – get CNN activations, and see if you can predict the fusiform face area (FFA) responses. Really, really high correlations.

Oh interesting – then you can test the trained model on all of ImageNet, and see what it responds to…ONLY faces. Damn.

LMAO can feed things into GANs too.